Over the last decade, AI has achieved major breakthroughs and transformed a number of industries, including robotics, self-driving cars, and healthcare. Our goal is to explore new paradigms for AI and intelligent computing from the perspective of hardware. Specifically, we are exploring the hardware acceleration of AI algorithms such as neural networks, based on technologies such as digital logic, FPGAs, resistive RAM (RRAM), and silicon photonic components. Open PhD positions can be found under “Open PhD Positions”.

Welcome!

Welcome to the Hardware for Artificial Intelligence Group!

Latest News

-


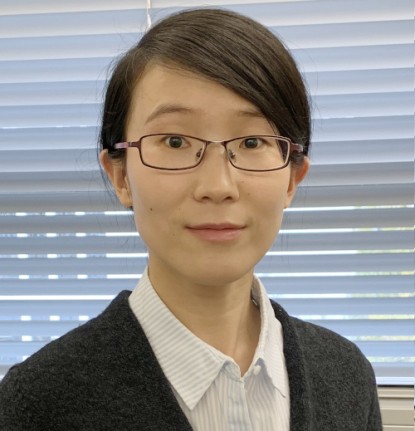

Prof. Dr.-Ing. Grace Li Zhang received an ERC Starting Grant (EUR 1.5 million). Congratulations!

-

One paper was accepted by ICLR 2025. Congratulations!

The title of the paper is “Basis Sharing: Cross-Layer Parameter Sharing for Large Language Model Compression”.

-


One paper was accepted by DATE 2025.

The title is “CorrectBench: Automatic Testbench Generation with Functional Self-Correction using LLMs for HDL Design”.

-


Two papers were accepted by MLCAD 2024.

The titles are “AutoBench: Automatic Testbench Generation and Evaluation Using LLMs for HDL Design” and “Automated C/C++ Program Repair for High-Level Synthesis via Large Language Models”.

-

One paper was accepted by ICCAD 2024.

The title of this paper is “BasisN: Reprogramming-Free RRAM-Based In-Memory-Computing by Basis Combination for Deep Neural Networks”.

-


One paper was accepted by AICAS 2024.

-


Five papers were accepted by Design, Automation and Test in Europe (DATE) 2024. Two of them were nominated for the Best Paper Award!